Examples first. Suppose we have two servers, node1 (10.245.1.2) and node2 (10.245.1.3), and we want to set up an IPIP network between them.

On node1 (10.245.1.2), run these commands:

ip=192.168.41.0

dst_host=10.245.1.3

dst_subnet=192.168.42.0/24

modprobe -v ipip

ip l set tunl0 up

ip l set tunl0 mtu 1480

ip a add $ip/32 dev tunl0

ip r add $dst_subnet via $dst_host dev tunl0 onlink

On node2 (10.245.1.3), run the same commands with different variables:

ip=192.168.42.0

dst_host=10.245.1.2

dst_subnet=192.168.41.0/24
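Since both nodes run the same five commands with different variables, the setup can be sketched as one parameterized function. The function name ipip_setup_cmds is hypothetical, and the sketch only prints the commands (a dry run) instead of executing them, so it needs no root privileges.

```shell
#!/bin/sh
# ipip_setup_cmds IP DST_HOST DST_SUBNET
# Hypothetical helper: prints (does not run) the setup commands for one node.
ipip_setup_cmds() {
    ip=$1
    dst_host=$2
    dst_subnet=$3
    cat <<EOF
modprobe -v ipip
ip l set tunl0 up
ip l set tunl0 mtu 1480
ip a add $ip/32 dev tunl0
ip r add $dst_subnet via $dst_host dev tunl0 onlink
EOF
}

# node1
ipip_setup_cmds 192.168.41.0 10.245.1.3 192.168.42.0/24
# node2
ipip_setup_cmds 192.168.42.0 10.245.1.2 192.168.41.0/24
```

Piping the output into sh as root on the matching node would apply it; reviewing the printed commands first is the safer habit.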

Check that they are connected through IPIP. On node1:

ping -c 2 192.168.42.0

PING 192.168.42.0 (192.168.42.0) 56(84) bytes of data.

64 bytes from 192.168.42.0: icmp_seq=1 ttl=64 time=1.67 ms

64 bytes from 192.168.42.0: icmp_seq=2 ttl=64 time=63.5 ms

--- 192.168.42.0 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1007ms

rtt min/avg/max/mdev = 1.670/32.592/63.514/30.922 ms

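onlink: pretend that the next hop is directly attached to this link, even if it does not match any interface prefix. Here the next hop is the peer's host address, which is not inside any prefix configured on tunl0, so the route needs onlink. This is also useful when your nodes are not L2-connected.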
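To see why the route needs onlink, we can check whether the next hop falls inside the prefix configured on tunl0. This is a minimal sketch: the helpers ip_to_int and in_prefix are hypothetical names, the 10.245.1.0/24 underlay prefix is an assumption (the hosts' netmask is not given above), and the arithmetic assumes a shell with 64-bit integers.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_prefix ADDR NET/LEN: succeed if ADDR lies inside NET/LEN.
in_prefix() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    len=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}

# The next hop is not covered by tunl0's own /32 ...
if in_prefix 10.245.1.3 192.168.41.0/32; then
    echo "covered by tunl0 prefix"
else
    echo "not covered by tunl0 prefix: onlink needed"
fi

# ... although it would be covered by the (assumed) underlay prefix.
if in_prefix 10.245.1.3 10.245.1.0/24; then
    echo "covered by underlay prefix"
fi
```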

Note that we didn't just create a one-to-one tunnel network. We created a one-to-many tunnel network, because we didn't specify the tunnel device's remote address. That means we can add more nodes to the network. Suppose we are adding a node3 (10.245.1.4).

On node3 (10.245.1.4), run these commands:

ip=192.168.43.0

modprobe -v ipip

ip l set tunl0 up

ip l set tunl0 mtu 1480

ip a add $ip/32 dev tunl0

ip r add 192.168.41.0/24 via 10.245.1.2 dev tunl0 onlink

ip r add 192.168.42.0/24 via 10.245.1.3 dev tunl0 onlink

On node1 and node2, run:

ip r add 192.168.43.0/24 via 10.245.1.4 dev tunl0 onlink
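As more nodes join, every node needs one route per peer. The following sketch prints the routes a given node needs from a small node table. The table layout and the function name mesh_routes are hypothetical; the addresses are the ones used above.

```shell
#!/bin/sh
# Hypothetical node table: "<host IP> <tunnel subnet>" per line.
nodes="10.245.1.2 192.168.41.0/24
10.245.1.3 192.168.42.0/24
10.245.1.4 192.168.43.0/24"

# mesh_routes SELF_HOST: print the routes SELF_HOST needs to reach every peer.
mesh_routes() {
    self=$1
    echo "$nodes" | while read -r host subnet; do
        # A node does not need a tunnel route to its own subnet.
        if [ "$host" != "$self" ]; then
            echo "ip r add $subnet via $host dev tunl0 onlink"
        fi
    done
}

# Routes for node3; these match the two routes added on node3 above.
mesh_routes 10.245.1.4
```

With n nodes, each node carries n - 1 such routes, which is fine for a handful of hosts but is usually delegated to a routing daemon or an orchestrator in larger setups.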

Check that they are connected through IPIP. On both node1 and node2:

ping -c 2 192.168.43.0

PING 192.168.43.0 (192.168.43.0) 56(84) bytes of data.

64 bytes from 192.168.43.0: icmp_seq=1 ttl=64 time=9.79 ms

64 bytes from 192.168.43.0: icmp_seq=2 ttl=64 time=8.47 ms

--- 192.168.43.0 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 8.475/9.135/9.795/0.660 ms

They are truly connected! Sounds interesting.

How it works

Take a look at the IPIP packets. We can see that each IPIP packet carries an outer IP header (10.245.1.2 > 10.245.1.3) in front of the inner IP header (192.168.41.0 > 192.168.42.0).

$ tcpdump -vvnneSs 0 -i any host 10.245.1.2 and port not 4379

tcpdump: listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

14:10:02.157389 In 08:00:27:d6:25:ca ethertype IPv4 (0x0800), length 120: (tos 0x0, ttl 64, id 4699, offset 0, flags [DF], proto IPIP (4), length 104)

10.245.1.2 > 10.245.1.3: (tos 0x0, ttl 64, id 44390, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.41.0 > 192.168.42.0: ICMP echo request, id 31937, seq 1, length 64

14:10:02.157536 Out 08:00:27:4f:78:49 ethertype IPv4 (0x0800), length 120: (tos 0x0, ttl 64, id 60156, offset 0, flags [none], proto IPIP (4), length 104)

10.245.1.3 > 10.245.1.2: (tos 0x0, ttl 64, id 64216, offset 0, flags [none], proto ICMP (1), length 84)

192.168.42.0 > 192.168.41.0: ICMP echo reply, id 31937, seq 1, length 64
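The lengths in the capture are consistent with exactly one extra 20-byte IPv4 header per packet. A small worked calculation, assuming IPv4 headers without options, a 16-byte Linux cooked (LINUX_SLL) capture header, and a 1500-byte MTU on the underlying interface (the last value is an assumption, it is not shown above):

```shell
#!/bin/sh
icmp_len=64     # ICMP header (8) + payload (56): "length 64" in the capture
ip_hdr=20       # IPv4 header without options
sll_hdr=16      # Linux cooked capture pseudo-header (assumption stated above)
underlay_mtu=1500   # assumed MTU of the physical interface

inner_len=$((icmp_len + ip_hdr))        # inner IP packet: "length 84"
outer_len=$((inner_len + ip_hdr))       # outer IP packet: "length 104"
frame_len=$((outer_len + sll_hdr))      # captured frame: "length 120"
tunnel_mtu=$((underlay_mtu - ip_hdr))   # room left for the inner packet

echo "inner=$inner_len outer=$outer_len frame=$frame_len tunnel_mtu=$tunnel_mtu"
```

This is also where the mtu 1480 in the setup commands comes from: the tunnel has to leave 20 bytes of headroom for the outer header.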

From the way we set up the network, we can guess that the IPIP module receives the inner IP packets that the routing decision sends to the tunnel device, prepends an outer IP header, and sends the packets out. But how does it do decapsulation?

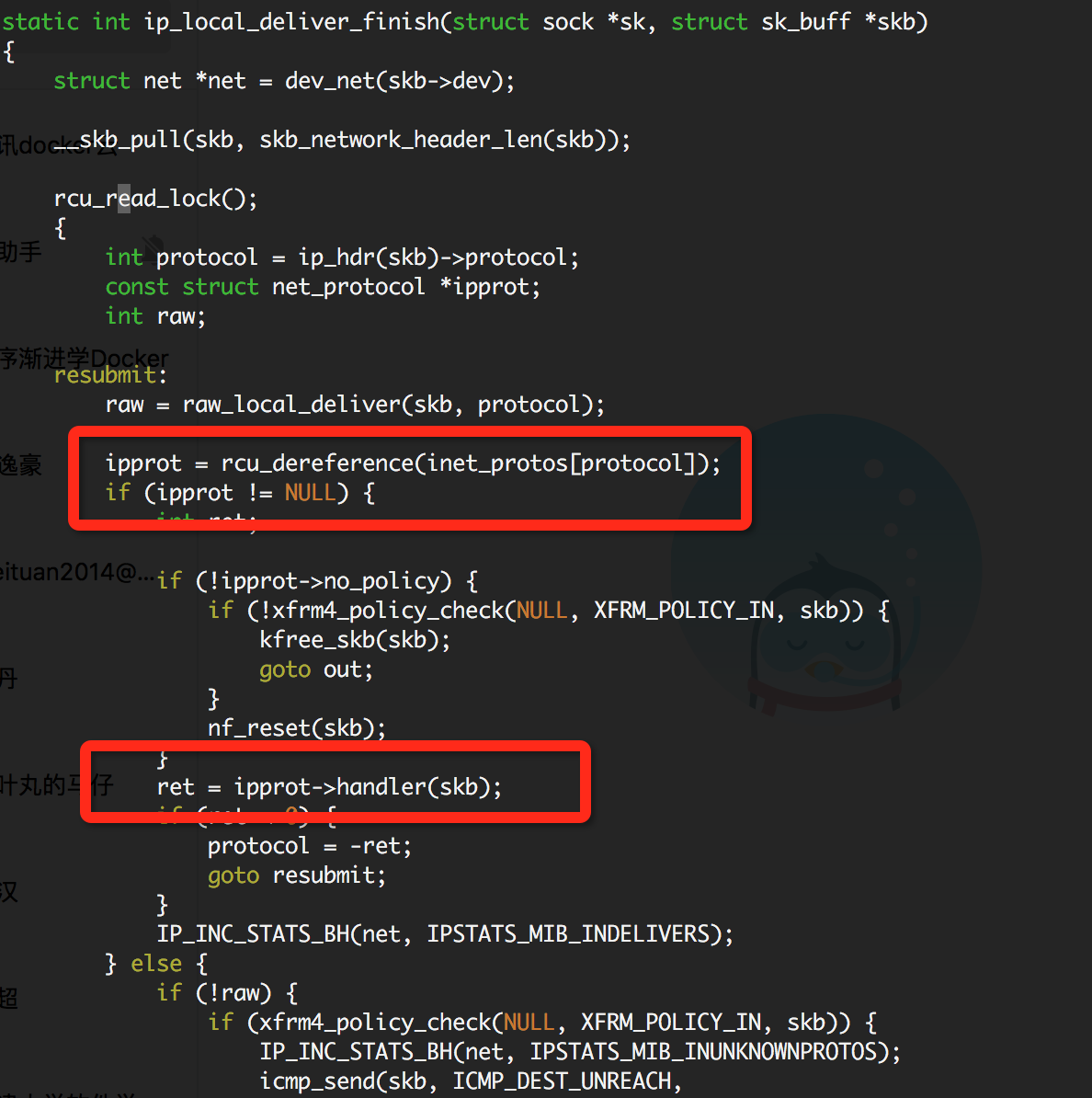

The code tells the truth. This is how the ipip module receives packets: the kernel hands a packet to the module because of the protocol number in its outer IP header (proto IPIP (4) in the capture above).

We can confirm this by tracing the kernel call stack that leads to the tunnel module's receive function:

[root@TENCENT64 /data/rami/bin/perf-tools-master/kernel]# ./kprobe -s 'p:ip_tunnel_rcv'

Tracing kprobe ip_tunnel_rcv. Ctrl-C to end.

<idle>-0 [010] d.s. 8922743.125905: ip_tunnel_rcv: (ip_tunnel_rcv+0x0/0x710 [ip_tunnel])

<idle>-0 [010] d.s. 8922743.125913: <stack trace>

=> tunnel4_rcv

=> ip_local_deliver_finish

=> ip_local_deliver

=> ip_rcv_finish

=> ip_rcv

=> __netif_receive_skb_core

=> __netif_receive_skb

=> process_backlog

=> net_rx_action

=> __do_softirq

=> call_softirq

=> do_softirq

=> irq_exit

=> smp_call_function_single_interrupt

=> call_function_single_interrupt

=> cpuidle_idle_call

=> arch_cpu_idle

=> cpu_startup_entry

=> start_secondary
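The dispatch step visible in the trace (ip_local_deliver_finish calling tunnel4_rcv) depends only on the protocol byte of the outer IPv4 header. As a rough illustration, the sketch below rebuilds the outer header of the echo request from the capture as a hex string and reads that byte. The checksum field is zeroed for illustration, and the case statement is only a toy stand-in for the kernel's protocol handler table.

```shell
#!/bin/sh
# Outer IPv4 header of the captured echo request, as hex:
# version/IHL 45, tos 00, total length 0068 (104), id 125b (4699),
# flags/offset 4000 (DF), ttl 40 (64), protocol 04, checksum 0000 (zeroed),
# source 0af50102 (10.245.1.2), destination 0af50103 (10.245.1.3).
hdr=45000068125b4000400400000af501020af50103

# The protocol field is byte 9 of the header, i.e. hex characters 19-20.
proto_hex=$(printf '%s' "$hdr" | cut -c19-20)
proto=$((0x$proto_hex))

# Toy dispatch table, mimicking the lookup by protocol number.
case $proto in
    4) handler="tunnel4_rcv (IPIP)" ;;
    1) handler="icmp_rcv (ICMP)" ;;
    *) handler="other" ;;
esac

echo "protocol=$proto handler=$handler"
```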

Multiple IPIP devices

There are more details to explain. If there are multiple IPIP devices, how does the kernel choose which one does the decapsulation?

Back to the most widely used setup, the one-to-one IPIP network. We can create a one-to-one IPIP device with ip tunnel add ipiptun mode ipip local 10.3.3.3 remote 10.4.4.4 ttl 64 dev eth0. If all the IPIP devices have local/remote attributes, we may guess that the IPIP module chooses the candidate device by matching those attributes against the packet's outer src/dst IP, since local and remote are the tunnel endpoints' host addresses.

Again, we can check the kernel code. ipip_rcv invokes ip_tunnel_lookup, and the comment above ip_tunnel_lookup tells the truth.

/* Fallback tunnel: no source, no destination, no key, no options

Tunnel hash table:

We require exact key match i.e. if a key is present in packet

it will match only tunnel with the same key; if it is not present,

it will match only keyless tunnel.

All keysless packets, if not matched configured keyless tunnels

will match fallback tunnel.

Given src, dst and key, find appropriate for input tunnel.

*/

key: applies to keyed tunnels such as GRE. IPIP is a keyless tunnel.

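So an IPIP packet is matched to the appropriate input tunnel device by its outer src/dst IP: the outer source is compared with the tunnel's remote address and the outer destination with the tunnel's local address.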
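The matching order can be modelled with a short sketch. This is a simplified model of the lookup as we read it, not the kernel's implementation: it ignores keys, hash buckets, multicast and whether a device is up, and it tries an exact local/remote match first, then remote-only, then local-only, then the fallback. The tunnel table and the function names first_match and lookup are hypothetical.

```shell
#!/bin/sh
# Hypothetical tunnel table: "<name> <local> <remote>", "any" means unset.
tunnels="ipiptun 10.3.3.3 10.4.4.4
tunl1 10.245.1.2 any
tunl0 any any"

# first_match LOCAL REMOTE: print the first tunnel configured with exactly
# these local/remote values; fail if there is none.
first_match() {
    while read -r name loc rem; do
        if [ "$loc" = "$1" ] && [ "$rem" = "$2" ]; then
            echo "$name"
            return 0
        fi
    done <<EOF
$tunnels
EOF
    return 1
}

# lookup OUTER_SRC OUTER_DST: pick the tunnel for an incoming packet.
lookup() {
    src=$1
    dst=$2
    # 1. local and remote both match
    first_match "$dst" "$src" && return 0
    # 2. remote matches, local unset
    first_match any "$src" && return 0
    # 3. local matches, remote unset
    first_match "$dst" any && return 0
    # 4. fallback tunnel: no local, no remote
    first_match any any
}

lookup 10.4.4.4 10.3.3.3      # prints ipiptun
lookup 10.245.1.3 10.245.1.2  # prints tunl1
lookup 10.9.9.9 10.8.8.8      # prints tunl0
```

In this model a packet only reaches tunl0 when no configured tunnel matches its outer addresses.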

If you create multiple IPIP devices without specifying each one's local/remote attributes, tunl0 is the fallback device, and it may not decapsulate the packets.

You can test this by creating a new tunl1 device instead of using the netns default tunl0: the nodes won't be reachable via the IPIP network. Setting the local attribute on tunl1 makes them reachable again.

ip=192.168.41.0

dev=tunl1

nodeip=10.245.1.2

dst_host=10.245.1.3

dst_subnet=192.168.42.0/24

ip tun add $dev mode ipip local $nodeip

ip link set $dev up

ip a add $ip dev $dev

ip route add $dst_subnet via $dst_host dev $dev onlink